The main job of the transport layer is to hide the complexity of the network from the upper layers: application, presentation, and session. Application developers can therefore build their applications without thinking about how to deal with the network. This provides more transparency, and more independence in the development and deployment of components in the TCP/IP protocol stack.

Two protocols are available at the transport layer: UDP (User Datagram Protocol) and TCP (Transmission Control Protocol). Both perform session multiplexing, one of the primary functions of the transport layer. It means that a machine running multiple sessions or applications can still use a single IP address to communicate with the network. For example, a server can offer both web and FTP services from the same IP address. Another function is segmentation, which takes units of information from the application layer and breaks them into segments that are then placed into packets and sent across the network. Optionally, the transport layer also makes sure that those segments reach the other side, using reliability and flow control mechanisms. This is optional because only TCP, as a connection-oriented protocol, provides that service. UDP is a connectionless protocol. It can be used when speed is the main concern and mechanisms such as flow control and reliability would slow down the connection.
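Segmentation can be made concrete with a minimal Python sketch that splits one application message into pieces of at most a given size. The 1460-byte maximum segment size (MSS) is an assumption chosen for illustration (a common value on Ethernet). `segment` is a hypothetical helper, and transport and IP headers are ignored.

```python
def segment(data: bytes, mss: int) -> list[bytes]:
    """Split one application-layer message into pieces of at most `mss` bytes."""
    if mss <= 0:
        raise ValueError("mss must be positive")
    return [data[i:i + mss] for i in range(0, len(data), mss)]


message = b"x" * 3000               # one 3000-byte application message
pieces = segment(message, 1460)     # assumed MSS of 1460 bytes
print([len(p) for p in pieces])     # [1460, 1460, 80]
```

Joining the pieces in order gives back the original message. That is why the receiving side needs some way to know the order, which is the job of sequencing.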

So, let's quickly compare the two options at the transport layer. TCP, being connection-oriented, is a reliable protocol. It provides mechanisms such as sequencing, which assigns sequence numbers to the data so that the other side can reassemble it in the right order. A whole scheme of timers and timeouts is also included to guarantee delivery. UDP, on the other hand, is a connectionless protocol and does not provide sequencing or any other mechanism to guarantee delivery. An analogy for TCP would be a telephone conversation, in which you have to establish the call before you talk. UDP is more like postal mail, which does not guarantee delivery: you simply send the letters and hope that they will get there. Of course, with UDP someone else needs to deal with reliability, and this is typically left to the upper layers. Examples of each are listed here. Email, file sharing, downloading, and voice signaling use TCP as a reliable transport. Voice and video communications benefit more from the lower overhead, and resulting speed, of a transport that does not provide reliability. The assumption here is that those applications can tolerate a certain amount of packet loss but benefit more from best-effort, low-overhead transport.

| | Reliable | Best-Effort |
|---|---|---|
| Protocol | TCP | UDP |
| Connection Type | Connection-oriented | Connectionless |
| Sequencing | Yes | No |
| Uses | E-mail, file sharing, downloading | Voice streaming, video streaming, real-time services |

UDP Characteristics

UDP is a connectionless protocol, so it provides only limited error checking and no data recovery features. It does not retransmit lost packets in and of itself. The result is best-effort delivery, and applications using UDP benefit from the low overhead of having no reliability mechanisms. "Limited" error checking means that each datagram carries a checksum that the receiver uses to verify the integrity of the data. A datagram that fails the check is discarded rather than repaired. The checksum is computed over the UDP header, the data, and a pseudoheader. The pseudoheader is a small set of fields taken from the IP header (the source and destination IP addresses, the protocol number, and the UDP length), and it is used only for the checksum and is not transmitted. Finally, if no service is listening on the destination port of a machine, that host typically replies with an ICMP port unreachable message reporting that the service is not available.
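The "limited" error checking can be sketched in Python. This is a minimal illustration, assuming IPv4, of the Internet checksum (RFC 1071) computed over the pseudoheader, the UDP header, and the data. The function names are hypothetical, and the addresses come from documentation ranges.

```python
import socket
import struct


def internet_checksum(data: bytes) -> int:
    """Ones' complement of the ones' complement sum of 16-bit words (RFC 1071)."""
    if len(data) % 2:
        data = data + b"\x00"                     # pad odd-length input with a zero byte
    total = 0
    for (word,) in struct.iter_unpack("!H", data):
        total += word
        total = (total & 0xFFFF) + (total >> 16)  # fold any carry back into 16 bits
    return ~total & 0xFFFF


def udp_checksum(src_ip: str, dst_ip: str, src_port: int, dst_port: int,
                 payload: bytes) -> int:
    length = 8 + len(payload)                     # UDP header (8 bytes) + data
    # IPv4 pseudoheader: src IP, dst IP, zero byte, protocol 17 (UDP), UDP length
    pseudo = (socket.inet_aton(src_ip) + socket.inet_aton(dst_ip)
              + struct.pack("!BBH", 0, 17, length))
    header = struct.pack("!HHHH", src_port, dst_port, length, 0)  # checksum field zeroed
    csum = internet_checksum(pseudo + header + payload)
    return csum or 0xFFFF                         # a computed 0 is transmitted as 0xFFFF


src, dst, data = "192.0.2.1", "198.51.100.7", b"hello"
csum = udp_checksum(src, dst, 5000, 53, data)

# Receiver check: with the transmitted checksum in place, the result is 0.
pseudo = socket.inet_aton(src) + socket.inet_aton(dst) + struct.pack("!BBH", 0, 17, 8 + len(data))
received = struct.pack("!HHHH", 5000, 53, 8 + len(data), csum) + data
print(internet_checksum(pseudo + received) == 0)  # True
```

The checksum only detects damage. Nothing in UDP asks for the datagram again, which is what "no data recovery" means in practice.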

The overhead of a UDP header is small, only 8 bytes. The source and destination ports identify the upper-layer applications being transported using UDP, a length field gives the combined length of the header and payload, and a checksum verifies integrity.
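Because the UDP header is just four 16-bit fields, it can be built and parsed in a few lines of Python. This sketch assumes the checksum is left at 0, which in IPv4 means "no checksum computed". The helper names and port numbers are hypothetical.

```python
import struct
from typing import NamedTuple

UDP_HEADER = struct.Struct("!HHHH")   # four 16-bit fields, network byte order


class UDPHeader(NamedTuple):
    src_port: int
    dst_port: int
    length: int       # header (8 bytes) + payload, in bytes
    checksum: int


def build_datagram(src_port: int, dst_port: int, payload: bytes, checksum: int = 0) -> bytes:
    length = UDP_HEADER.size + len(payload)
    return UDP_HEADER.pack(src_port, dst_port, length, checksum) + payload


def parse_datagram(datagram: bytes) -> tuple[UDPHeader, bytes]:
    header = UDPHeader(*UDP_HEADER.unpack_from(datagram))
    return header, datagram[UDP_HEADER.size:header.length]


dgram = build_datagram(53000, 53, b"query")
hdr, body = parse_datagram(dgram)
print(hdr)    # UDPHeader(src_port=53000, dst_port=53, length=13, checksum=0)
print(body)   # b'query'
```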

TCP Characteristics

A connection-oriented protocol like TCP provides reliability, error detection and recovery, and reliable delivery. Because of that, it is much more complex than UDP. In addition to checksums for error checking, TCP adds a sequence number to each segment so that the other end can verify the order of the data and look for missing pieces. A TCP connection is like a walkie-talkie conversation. It includes acknowledgments that tell each side that the other side received the information, and only after receiving confirmation of delivery does the sender continue with the rest of the data. TCP also has retransmission capabilities for data recovery. If a segment goes missing in transit, the sender retransmits it, identifying the missing data by its sequence number.
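The receiving side of sequencing can be sketched as follows. Segments are buffered by sequence number and delivered in order, and delivery stops at the first gap. The first missing byte is what the receiver keeps asking for until the retransmission arrives. This is a simplified Python illustration with hypothetical names. It treats sequence numbers as byte offsets and ignores wraparound, overlapping segments, and timers.

```python
def reassemble(segments: list[tuple[int, bytes]], initial_seq: int) -> tuple[bytes, int]:
    """Deliver in-order bytes starting at initial_seq.

    Returns (delivered_bytes, next_expected_seq). If a segment is missing,
    delivery stops at the gap and next_expected_seq points at the first
    missing byte: the value the receiver would acknowledge and wait for.
    """
    buffered = dict(segments)        # seq -> data; arrival order does not matter
    delivered = bytearray()
    expected = initial_seq
    while expected in buffered:
        data = buffered.pop(expected)
        delivered += data
        expected += len(data)        # next segment starts right after this data
    return bytes(delivered), expected


arrived = [(106, b"world"), (100, b"hello "), (116, b"!")]  # bytes 111-115 lost
print(reassemble(arrived, 100))      # (b'hello world', 111)

arrived.append((111, b", hi "))      # retransmitted segment fills the gap
print(reassemble(arrived, 100))      # (b'hello world, hi !', 117)
```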

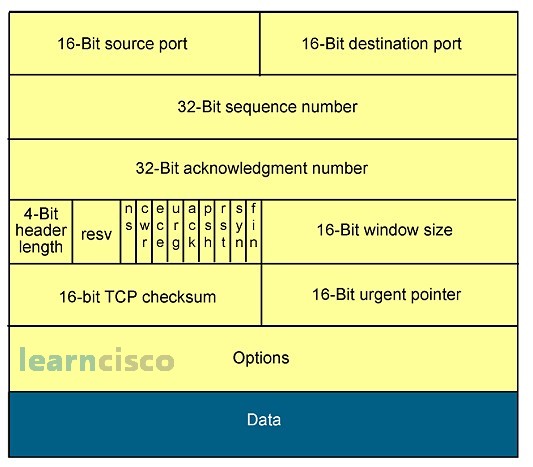

All of this overhead results not only in additional processes and protocols, like the manipulation of sequence numbers and the sliding window protocol, but also in additional information that must be included in the header. In TCP, we see not only source and destination ports to identify upper-layer applications, but also sequence numbers and acknowledgment numbers for confirmation of delivery. The window size streamlines delivery confirmation by letting several segments be acknowledged in one shot. The checksum verifies the integrity of each segment. The urgent pointer, options, and flags (control bits such as SYN, ACK, and FIN) support additional functions, such as marking urgent data and setting up and tearing down connections.
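The fixed part of the TCP header is 20 bytes, and Python's `struct` module can pack it directly. This sketch omits options, leaves the checksum at 0, and decodes only the six classic flags. The helper names and sample values are illustrative assumptions.

```python
import struct

# src port, dst port, sequence number, acknowledgment number,
# data offset byte, flags byte, window, checksum, urgent pointer
TCP_HEADER = struct.Struct("!HHIIBBHHH")   # 20-byte fixed header, no options

FIN, SYN, RST, PSH, ACK, URG = 0x01, 0x02, 0x04, 0x08, 0x10, 0x20
FLAG_NAMES = (("FIN", FIN), ("SYN", SYN), ("RST", RST), ("PSH", PSH), ("ACK", ACK), ("URG", URG))


def build_tcp_header(src_port, dst_port, seq, ack, flags, window, checksum=0, urgent=0):
    data_offset = 5 << 4   # header length = 5 x 32-bit words (20 bytes), in the top 4 bits
    return TCP_HEADER.pack(src_port, dst_port, seq, ack, data_offset,
                           flags, window, checksum, urgent)


def parse_tcp_header(raw):
    src, dst, seq, ack, off, flags, window, checksum, urgent = TCP_HEADER.unpack_from(raw)
    return {
        "src_port": src, "dst_port": dst, "seq": seq, "ack": ack,
        "header_len": (off >> 4) * 4,   # convert 32-bit words to bytes
        "flags": [name for name, bit in FLAG_NAMES if flags & bit],
        "window": window, "checksum": checksum, "urgent": urgent,
    }


hdr = build_tcp_header(1028, 23, seq=1000, ack=5001, flags=SYN | ACK, window=65535)
print(len(hdr))                          # 20
print(parse_tcp_header(hdr)["flags"])    # ['SYN', 'ACK']
```

Compared with the 8-byte UDP header, the extra fields are the per-segment cost of sequencing, acknowledgments, and windowing.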

TCP/IP Application Layer Overview

The job of the transport layer, again, is to provide a transparent network and hide the complexity of the underlying network from upper-layer applications. Those applications can be built to use either TCP or UDP depending on their needs: whether they need confirmation and a reliable network, or speed and lower overhead. The applications listed here are typical of Internet scenarios. They include FTP, TFTP, and NFS for file transfers; SMTP and POP3 for email; various remote login applications; SNMP for network management; and the Domain Name System (DNS) as a support application that translates names into IP addresses.

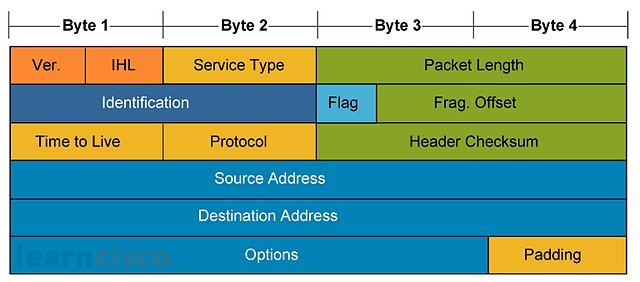

One of the keys to any layered model is the interaction, or interface, between layers, and layers 3 and 4 of the OSI model are no exception. For example, when a device receives a packet from the network and processes it with IP at layer 3, it needs additional information to determine whether TCP or UDP should process the packet next. In other words, it must know which transport layer protocol takes it from there. IP uses the protocol field of its header to identify that transport layer protocol. A value of 6 in the protocol field means that TCP should process the packet, whereas 17 identifies UDP.
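The layer 3 to layer 4 handoff can be sketched as a lookup on the protocol field, which sits at byte offset 9 of the IPv4 header. This minimal Python example assumes an IPv4 packet and does not validate the header checksum. `demux_ipv4` is a hypothetical helper.

```python
import socket
import struct

PROTOCOLS = {6: "TCP", 17: "UDP"}   # the two values discussed in the text


def demux_ipv4(packet: bytes) -> tuple[str, bytes]:
    """Return the transport protocol named by the IPv4 protocol field, plus the payload."""
    ihl = (packet[0] & 0x0F) * 4    # IHL counts 32-bit words; convert to bytes
    protocol = packet[9]            # protocol field: byte 9 of the IPv4 header
    name = PROTOCOLS.get(protocol, f"unknown ({protocol})")
    return name, packet[ihl:]       # payload to be handed to that transport protocol


# A minimal 20-byte IPv4 header (header checksum left at 0; not validated here)
header = struct.pack("!BBHHHBBH4s4s",
                     0x45,                  # version 4, IHL 5 (20 bytes)
                     0, 20 + 8, 0, 0,       # TOS, total length, identification, flags/fragment
                     64, 17, 0,             # TTL, protocol 17 (UDP), header checksum
                     socket.inet_aton("192.0.2.1"), socket.inet_aton("192.0.2.2"))
print(demux_ipv4(header + bytes(8)))        # ('UDP', b'\x00\x00\x00\x00\x00\x00\x00\x00')
```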

Similarly, between the transport layer and the upper layers, TCP and UDP need additional information to determine which application process should receive each segment. Enter port numbers, the transport layer mechanism for identifying applications, carried in the source and destination port fields of the transport header. For example, port 21 represents FTP, 23 Telnet, 80 web-based applications using HTTP, 53 DNS, 69 TFTP, and 161 SNMP. These numbers must be unique, so they are assigned by the Internet Assigned Numbers Authority (IANA). The well-known ports associated with common applications fall in the range 0 through 1023. Registered ports, 1024 through 49151, are assigned to other applications, including proprietary ones. The remaining range, 49152 through 65535, is available for dynamically assigned ports.
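IANA divides the 16-bit port space into well-known (0 to 1023), registered (1024 to 49151), and dynamic or private (49152 to 65535) ranges. The small Python sketch below encodes those boundaries and the well-known ports listed in the text. `classify_port` and `WELL_KNOWN` are hypothetical names, and the dictionary is deliberately limited to the examples given.

```python
WELL_KNOWN = {21: "FTP", 23: "Telnet", 53: "DNS", 69: "TFTP", 80: "HTTP", 161: "SNMP"}


def classify_port(port: int) -> str:
    """Return the IANA range a port number falls in."""
    if not 0 <= port <= 65535:
        raise ValueError("port numbers are 16-bit values (0-65535)")
    if port <= 1023:
        return "well-known"
    if port <= 49151:
        return "registered"
    return "dynamic/private"


for p in (23, 8080, 51000):
    print(p, classify_port(p), WELL_KNOWN.get(p, "-"))
# 23 well-known Telnet
# 8080 registered -
# 51000 dynamic/private -
```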

Establishing a Connection

A reliable transport like TCP is responsible for establishing a connection before data is sent. The connection lets the hosts on each side of the conversation identify the particular session, and it hides the complexity of the network from that conversation. In other words, what the hosts see is a connection identifier, not the complex network underneath. Connections need to be established, maintained, and torn down when they are finished, and this is the job of protocols like TCP.

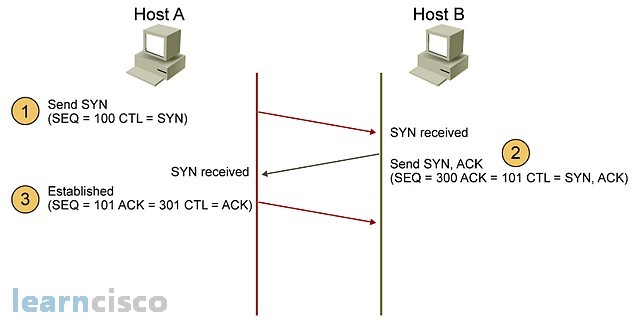

Connection establishment takes the form of what is known as a three-way handshake. This process synchronizes the two machines so that both know they are to be connected by TCP. The three-way handshake uses specially crafted segments that set control bits in the TCP header. Those control bits are identified by the keyword CTL in this diagram. It starts with the sender sending a segment with an initial sequence number and the SYN control bit set. The receiving end processes it and replies with what is known as a SYN-ACK, which has both the SYN and ACK flags set. The SYN-ACK carries the receiver's own initial sequence number and an acknowledgment number equal to the sender's sequence number plus one, which names the next byte it expects. The connection is fully established when the sender returns a final acknowledgment, in which only the ACK flag is set. This is similar to a telephone conversation in which we say, “Hello.” The other end says, “Hello, I am here,” and the sender says, “All right, we are established. Let’s start talking.”
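The sequence and acknowledgment numbers in the handshake follow a simple rule that can be traced in Python. The SYN consumes one sequence number, so each side acknowledges the other's initial sequence number plus one. The initial sequence numbers 100 and 300 are arbitrary values chosen for readability (real hosts pick them unpredictably), and the `Segment` type is a hypothetical stand-in for a TCP header.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Segment:
    flags: tuple[str, ...]     # control bits set in this segment
    seq: int                   # sequence number
    ack: Optional[int] = None  # acknowledgment number (only meaningful with ACK)


def three_way_handshake(client_isn: int, server_isn: int) -> list[tuple[str, Segment]]:
    syn = Segment(("SYN",), seq=client_isn)
    # The SYN consumes one sequence number, so the server expects client_isn + 1.
    syn_ack = Segment(("SYN", "ACK"), seq=server_isn, ack=syn.seq + 1)
    ack = Segment(("ACK",), seq=syn_ack.ack, ack=syn_ack.seq + 1)
    return [("client -> server", syn),
            ("server -> client", syn_ack),
            ("client -> server", ack)]


for direction, seg in three_way_handshake(100, 300):
    print(direction, seg)
```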

Flow Control

The flow control function of the transport layer, as performed by protocols like TCP, relies on two distinct but interrelated mechanisms. The first is acknowledgment. Acknowledgments are specially crafted segments that confirm delivery on behalf of the receiving end. The sender limits how much information it sends before it receives an acknowledgment of previously sent information.

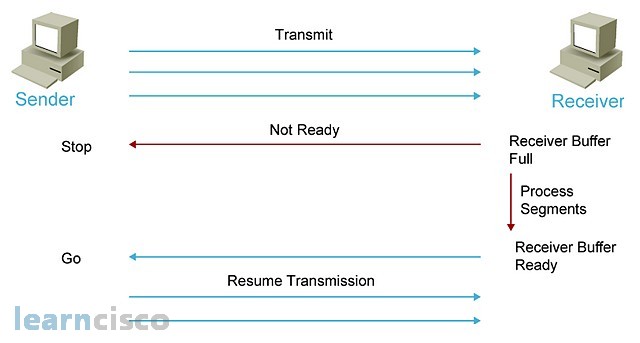

The second mechanism is windowing. Its first purpose is to acknowledge chunks of information rather than individual packets: the sender sends a series of packets, or a certain amount of information, and the receiver acknowledges that whole chunk in one shot instead of each piece by itself. However, windowing also serves the purpose of flow control. When the receiving end advertises a window size of zero, it means, “Hey, my buffers are full. I cannot process anything else, so please wait until I give you another signal.” When the receiving end’s buffers are cleared and the machine can receive more data, it advertises a nonzero window size. At that point, the sender resumes transmission.
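A minimal Python sketch of the zero-window signal, assuming the receiver advertises exactly the free space left in its buffer. The `Receiver` class and buffer sizes are hypothetical. Real TCP adds details omitted here, such as zero-window probes.

```python
class Receiver:
    """Receiver whose advertised window is the free space left in its buffer."""

    def __init__(self, buffer_size: int) -> None:
        self.buffer_size = buffer_size
        self.buffered = 0                 # bytes received but not yet read by the application

    @property
    def window(self) -> int:
        return self.buffer_size - self.buffered

    def receive(self, nbytes: int) -> int:
        if nbytes > self.window:
            raise ValueError("sender exceeded the advertised window")
        self.buffered += nbytes
        return self.window                # window size carried back in the acknowledgment

    def app_read(self, nbytes: int) -> int:
        self.buffered -= min(nbytes, self.buffered)
        return self.window                # window update once buffer space is freed


rx = Receiver(buffer_size=4096)
print(rx.receive(4096))    # 0: "my buffers are full", so the sender must pause
print(rx.app_read(1024))   # 1024: nonzero window, so the sender may resume
```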

So the window size is nothing more than the amount of unacknowledged information that can be in transit. The sender sends chunk number 1, and that chunk is defined as a number of bytes or kilobytes. The receiver acknowledges it by specifying the next chunk it is expecting. In other words, the receiver is not saying, “I am acknowledging chunk number 1 exactly.” It is saying, “I acknowledge chunk number 1 by asking you to send me chunk number 2 now.” The sender sees that and sends the chunk specified in the acknowledgment, and so on. In this example, the window size is 1, so each chunk is acknowledged individually. This is cumbersome and adds overhead to the network, because more acknowledgments are needed to provide flow control and keep the conversation going. It is important to understand that what I have been calling chunks are really segments at the transport layer, and their size is measured in bytes or kilobytes.

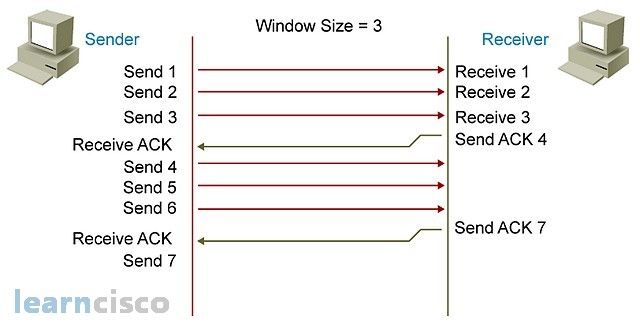

One acknowledgment per data segment not only adds overhead to the network but also slows down the conversation. It is similar to saying the word “over” after every single word in our walkie-talkie analogy: “Hello, over.” “How, over.” “Are, over.” “You, over.” TCP’s windowing mechanism lets it increase the number of segments that are acknowledged at once. In that sense, it is similar to saying, “Hello, how are you,” and then ending with “Over” in our walkie-talkie conversation. The segment numbers shown here are used for simplicity. The real window size is a number of bytes or kilobytes to be sent and acknowledged in one shot. So, after the three segments here are sent, the receiver acknowledges them by saying, “Send me number 4,” which acknowledges all three segments in one shot. Because real-life window sizes are counted in bytes, increasing the window size is similar to saying, “I was sending 64 kilobytes; now I am sending 128 kilobytes, and you can acknowledge all 128 instead of 64.”
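The effect of the window size on the number of acknowledgments can be sketched in Python. The sketch uses the simplified segment numbering from the figure rather than bytes, and it assumes no loss and one cumulative acknowledgment per window. `exchange` is a hypothetical helper.

```python
def exchange(num_segments: int, window: int) -> list[int]:
    """Send segments 1..num_segments, `window` segments at a time (no loss).

    Returns the acknowledgment numbers the receiver sends back. Each one names
    the next segment expected, so it acknowledges every segment before it.
    """
    if window < 1:
        raise ValueError("window must be at least 1 segment")
    acks = []
    next_to_send = 1
    while next_to_send <= num_segments:
        # Send a whole window's worth (or whatever is left) before waiting.
        next_to_send = min(next_to_send + window, num_segments + 1)
        acks.append(next_to_send)   # "Send me number N"
    return acks


print(exchange(3, window=1))   # [2, 3, 4]: one acknowledgment per segment
print(exchange(3, window=3))   # [4]: "Send me number 4" covers all three
print(exchange(6, window=3))   # [4, 7]
```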

A fixed window size would not let the sender and receiver adjust to network congestion. A dynamically negotiated window size, known as a sliding window, allows them to adjust to congestion and serves as a flow control mechanism.
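On the sender side, a sliding window is bookkeeping over two pointers: the oldest unacknowledged byte and the next byte to send. This Python sketch (hypothetical class, no loss or timers) shows the window sliding forward as acknowledgments arrive and growing when the receiver advertises a larger size. It models only the receiver-advertised window; real TCP also limits the sender with a separate congestion window.

```python
class SlidingWindowSender:
    """Sender-side bookkeeping for a byte-based sliding window (no loss, no timers)."""

    def __init__(self, initial_seq: int, window: int) -> None:
        self.snd_una = initial_seq   # oldest unacknowledged byte (left edge)
        self.snd_nxt = initial_seq   # next byte to send
        self.window = window         # most recent window advertised by the receiver

    def usable(self) -> int:
        """How many more bytes may be sent right now."""
        in_flight = self.snd_nxt - self.snd_una
        return max(self.window - in_flight, 0)

    def send(self, nbytes: int) -> int:
        sent = min(nbytes, self.usable())
        self.snd_nxt += sent
        return sent

    def on_ack(self, ack: int, window: int) -> None:
        """Slide the left edge forward and adopt the newly advertised window."""
        if self.snd_una < ack <= self.snd_nxt:
            self.snd_una = ack
        self.window = window


tx = SlidingWindowSender(initial_seq=1000, window=3000)
print(tx.send(5000))            # 3000: only one window's worth goes out
tx.on_ack(2000, window=3000)    # first 1000 bytes acknowledged; window slides right
print(tx.usable())              # 1000
tx.on_ack(4000, window=6000)    # everything acknowledged and the window grows
print(tx.usable())              # 6000
```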

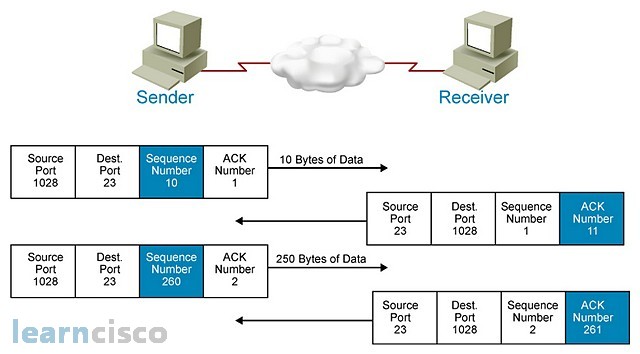

The flow control mechanism is completed by sequence numbers and acknowledgment numbers. Notice in this slide that the sequence numbers are shown more realistically, in bytes rather than segment numbers. A sequence number is a byte offset into the data stream, counted from the beginning of the connection. An acknowledgment number names the next byte the receiver expects. So an acknowledgment number of 11 confirms that the first 10 bytes arrived and asks for byte 11 next. Likewise, when one segment starts at sequence number 10 and the next starts at 260, the first segment carried 250 bytes of data. Notice also that the sender and receiver recognize this exchange as one connection from the source and destination ports being used, together with the two IP addresses. The source port is typically chosen dynamically by the client at connection time. The destination port is usually a well-known port identifying the particular application. In this example, the application is Telnet.
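The byte arithmetic behind acknowledgment numbers, and the way a connection is identified, can be sketched in Python. `next_ack` and `ConnectionId` are hypothetical names, and the addresses and source ports are illustrative. The values 10, 250, and 260 mirror the numbers in the text.

```python
from typing import NamedTuple


def next_ack(seq: int, payload_len: int) -> int:
    """Acknowledgment number for a segment: the next byte the receiver expects."""
    return (seq + payload_len) % 2**32    # sequence numbers are 32-bit and wrap around


class ConnectionId(NamedTuple):
    """One TCP connection: both IP addresses plus both port numbers."""
    client_ip: str
    client_port: int     # typically a dynamically chosen port
    server_ip: str
    server_port: int     # e.g. 23 for Telnet


print(next_ack(1, 10))      # 11: bytes 1-10 received, byte 11 expected next
print(next_ack(10, 250))    # 260

# Two Telnet sessions from the same client differ only in the client's port.
sessions = {
    ConnectionId("192.0.2.10", 1028, "198.51.100.5", 23): "session A",
    ConnectionId("192.0.2.10", 1029, "198.51.100.5", 23): "session B",
}
print(sessions[ConnectionId("192.0.2.10", 1029, "198.51.100.5", 23)])   # session B
```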
